As utilities move towards more digitization, the need for agility and the ability to solve complex business problems in a relatively short period of time has been ever increasing. Cloud platforms are built to help with some of these needs.

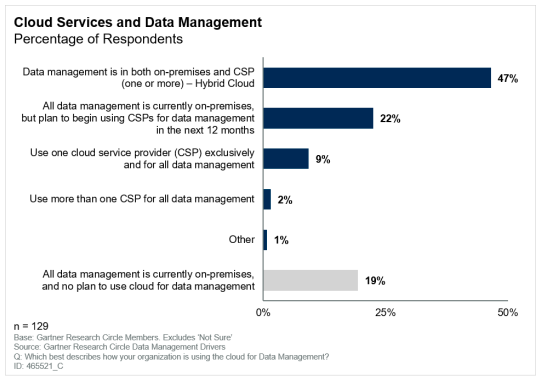

According to Gartner, companies are increasingly looking towards cloud for managing their data and serving analytic use cases. “In 2018, 72% of the overall market DBMS growth was attributed to cloud, which comprised nearly a quarter of the overall DBMS revenue. Further, in a recent Gartner Research Circle Survey on data management strategy more than 80% indicated their intent to use or were already using cloud for data management.”*

Figure 1. Cloud Services and Data Management (Source – Gartner)

Cloud vs. On-Premise

Although cloud platforms have numerous benefits over on-premise solutions, I want to highlight a couple of key advantages below that are useful to utility organizations.

Infrastructure Planning

In a large utility organization, infrastructure planning is itself an arduous effort that can take months. Moreover, the plan must account for capacity that will not be needed for several more years, so that hardware has to be provisioned up front. This not only leaves the infrastructure underutilized in the initial years but also increases the risk that the hardware will be outdated by future technological advancements.

Cloud technology helps us solve this problem, as hardware can be provisioned in a matter of minutes. Hardware can also be upgraded whenever required and sometimes with no downtime. Additionally, with the advent of serverless architecture there is no need to provision the hardware beforehand — provisioning can all be done by the cloud platform itself.

Machine Learning/AI Use Cases

Complex business problems that are solved through machine learning/AI require the use of advanced hardware like Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs). This hardware can be bought and installed on-premise, but it is expensive and may be utilized only for special use cases. If we make use of the cloud instead, not only does implementation time decrease, but the overall cost of ownership is also reduced.

Use Cases for Utilities

Below are two examples of use cases that are ideal for cloud implementation:

Analyzing load forecasting data for rate cases

Traditionally, load forecasting for rate cases has used aggregate data instead of actual data to reduce data volume. With the deployment of AMI meters, utilities now have granular data, down to 15-minute intervals, for millions of meters, 24 hours a day. This creates a huge volume of data.
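To get a feel for that volume, here is a back-of-the-envelope calculation. The fleet size of one million meters is an assumed figure chosen for illustration, not a number from any specific utility.

```python
# Rough count of AMI interval reads. METER_COUNT is an assumed figure.
MINUTES_PER_DAY = 24 * 60
INTERVAL_MINUTES = 15        # read interval mentioned in the text
METER_COUNT = 1_000_000      # hypothetical fleet size

reads_per_meter_per_day = MINUTES_PER_DAY // INTERVAL_MINUTES
reads_per_day = reads_per_meter_per_day * METER_COUNT
reads_per_year = reads_per_day * 365

print(f"{reads_per_meter_per_day} reads per meter per day")
print(f"{reads_per_day:,} reads per day")
print(f"{reads_per_year:,} reads per year")
```

Even at this assumed scale, a single year of interval reads runs into the tens of billions of rows.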

Sometimes regular Relational Database Management Systems (RDBMS) are not able to analyze such a large volume of data, or they have serious performance limitations. However, thanks to the highly scalable features of the cloud, you can upload data to cloud storage and then process it via a cloud-native Hadoop cluster or a cloud data warehouse such as Redshift on Amazon Web Services (AWS) or BigQuery on Google Cloud Platform (GCP). In addition, we can utilize natively integrated machine learning capabilities in the cloud for creating weather response functions, which in the on-premise environment would require separate servers for R, SAS, Python, etc.
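As a rough illustration of what a weather response function is, the sketch below fits a straight line relating system load to cooling degrees. It is a minimal example under stated assumptions, not a production forecasting model: the 65 °F balance point, the helper names, and every observation are made up for illustration.

```python
# Minimal weather response sketch: load = base + slope * cooling degrees.
# All readings below are synthetic values made up for illustration.

BALANCE_POINT_F = 65.0  # assumed temperature above which cooling load appears


def cooling_degrees(temp_f: float) -> float:
    """Degrees above the balance point (zero when it is cooler)."""
    return max(0.0, temp_f - BALANCE_POINT_F)


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# (temperature in degrees F, system load in MW), synthetic
observations = [(60, 500), (70, 550), (80, 650), (90, 750), (100, 850)]
xs = [cooling_degrees(t) for t, _ in observations]
ys = [float(load) for _, load in observations]

base_mw, mw_per_degree = fit_line(xs, ys)
forecast_95f = base_mw + mw_per_degree * cooling_degrees(95)
print(base_mw, mw_per_degree, forecast_95f)
```

A real weather response function would typically include heating degrees, humidity, day type, and many more observations; the point here is only the shape of the calculation.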

Real-time smart grid visibility and outage localization

One critical area for utilities is outage management: finding the outage cause, then turning the power back on for customers as soon as possible. This becomes even more critical during natural disasters. By analyzing outage management system and grid data, utilities can locate problems and localize the outages faster.

This use case requires real-time streaming and analysis of data. This capability is not inherently built into traditional databases, but it is possible on-premise by utilizing newer technologies such as Apache NiFi, Spark, etc. However, using a cloud platform for real-time streaming offers several advantages. First, cloud streaming technologies are tightly integrated with other services such as databases, monitoring, and debugging, so it is relatively straightforward to implement the solution end-to-end. Second, due to the scalability of the cloud, the platform can handle extreme fluctuations in input data volume seamlessly.
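The core idea of streaming outage localization can be sketched in a few lines: keep a sliding time window of power-loss events per feeder and raise a flag when enough distinct meters report within that window. This is a simplified, single-process sketch; the class name, the 60-second window, the threshold of three meters, and the sample events are all assumptions, and a real deployment would run this logic inside a managed streaming service.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60    # assumed look-back window
ALERT_THRESHOLD = 3    # assumed number of distinct meters that triggers an alert


class OutageDetector:
    """Flags a feeder when enough distinct meters lose power within the window."""

    def __init__(self, window_seconds=WINDOW_SECONDS, threshold=ALERT_THRESHOLD):
        self.window_seconds = window_seconds
        self.threshold = threshold
        # feeder_id -> deque of (timestamp, meter_id), oldest first
        self._events = defaultdict(deque)

    def observe(self, timestamp, feeder_id, meter_id):
        """Record one power-loss event; return True if the feeder looks out.

        Assumes events arrive in timestamp order.
        """
        events = self._events[feeder_id]
        events.append((timestamp, meter_id))
        # Drop events that have aged out of the window.
        while events and timestamp - events[0][0] > self.window_seconds:
            events.popleft()
        distinct_meters = {meter for _, meter in events}
        return len(distinct_meters) >= self.threshold


detector = OutageDetector()
# (seconds since start, feeder, meter), hypothetical sample stream
stream = [
    (0, "feeder-A", "m1"),
    (10, "feeder-B", "m7"),
    (20, "feeder-A", "m2"),
    (30, "feeder-A", "m3"),
    (200, "feeder-B", "m8"),
]
alerts = [(ts, feeder) for ts, feeder, meter in stream
          if detector.observe(ts, feeder, meter)]
print(alerts)
```

Only feeder-A is flagged in this sample, because the two feeder-B events are too far apart to fall in the same window.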

Generic Analytics Architecture in the Cloud

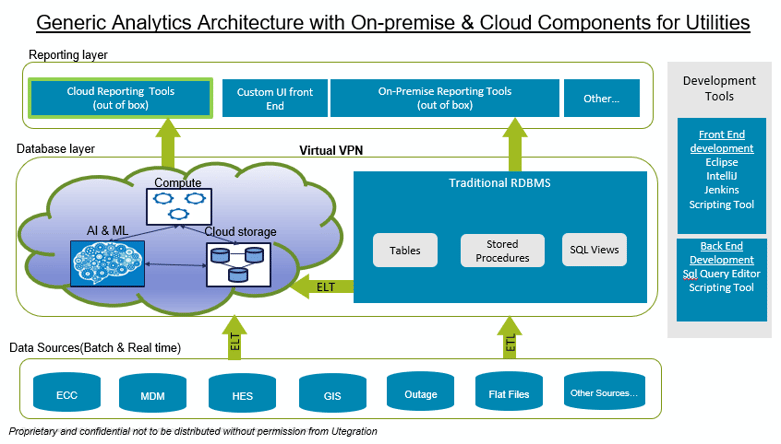

For most utilities in the initial stages of moving to the cloud, we envision and create a hybrid analytics environment where some data is migrated to the cloud for specific use cases while other existing analytics are still delivered by the on-premises data warehouse. However, over time, the environment becomes more cloud-dependent as the utility matures with cloud.

Fig 2. Typical hybrid on-premise and cloud architecture implemented in a utility. Actual architecture may change depending on the requirements and use cases.

Extract Transform Load (ETL) or Extract Load Transform (ELT)?

One of the first steps for delivering analytics in the cloud is to load the data in from multiple sources. Traditionally, data is loaded into on-premise data stores via the ETL method. Some of the key advantages of the ETL approach are that it minimizes data redundancy and saves on hardware storage space. However, since storage in the cloud is relatively cheap, raw data can be loaded into the cloud first and then transformed more efficiently by utilizing the scalability of the compute engines. Raw data can also be archived in the cloud at a relatively low cost. In a true Big Data analytics use case, you will also find that ELT performs faster than ETL because the transformation happens inside the database rather than in a separate ETL tool. Therefore, we would generally prefer an ELT solution when loading data to the cloud.

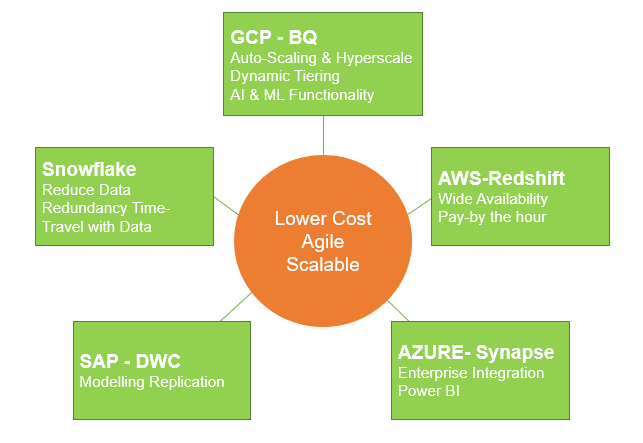
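The ELT pattern can be sketched as follows: land the raw rows unchanged, then run the transformation as SQL inside the database. In-memory SQLite stands in for a cloud data warehouse here purely so the example runs anywhere; the table names, columns, and sample reads are hypothetical.

```python
import sqlite3

# In-memory SQLite stands in for a cloud data warehouse (an assumption for
# illustration; a real deployment would use the warehouse's own bulk loader).
conn = sqlite3.connect(":memory:")

# Extract + Load: land the raw interval reads unchanged, as text.
conn.execute("CREATE TABLE raw_reads (meter_id TEXT, read_ts TEXT, kwh TEXT)")
raw_rows = [
    ("m1", "2020-06-01 00:00", "0.25"),
    ("m1", "2020-06-01 00:15", "0.50"),
    ("m1", "2020-06-01 01:00", "0.75"),
    ("m2", "2020-06-01 00:00", "1.00"),
    ("m2", "2020-06-01 00:15", "bad"),  # malformed value stays in the raw layer
]
conn.executemany("INSERT INTO raw_reads VALUES (?, ?, ?)", raw_rows)

# Transform: runs inside the database, after loading.
# Rows whose kwh does not start with a digit are skipped; the rest are
# cast to numbers and summed per meter and hour.
conn.execute("""
    CREATE TABLE hourly_usage AS
    SELECT meter_id,
           substr(read_ts, 1, 13) AS read_hour,
           SUM(CAST(kwh AS REAL)) AS kwh
    FROM raw_reads
    WHERE kwh GLOB '[0-9]*'
    GROUP BY meter_id, substr(read_ts, 1, 13)
""")

hourly = conn.execute(
    "SELECT meter_id, read_hour, kwh FROM hourly_usage "
    "ORDER BY meter_id, read_hour"
).fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_reads").fetchone()[0]
print(hourly)
```

Because the raw table is kept as loaded, the malformed row is still available for later inspection or for a corrected transformation, which is one practical benefit of loading before transforming.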

Cloud Vendors Comparison

In this section, I will talk briefly about some cloud providers that are strong competitors in the market.

Amazon Web Services (AWS) - Veteran in the market

AWS was one of the first enterprise cloud providers and currently has the most availability zones worldwide and the largest footprint in the market. Newer cloud providers enter the market with more nuanced products by capitalizing on AWS’ minor shortfalls. Nevertheless, AWS is still the leader in market share and continues to grow its armada of products.

Redshift is their flagship analytics platform. Historically, Redshift coupled data and compute together, which meant there was a learning curve involved in correctly configuring your enterprise’s cluster. Newer nodes within Redshift have now enabled separating data and compute.

Some of the leading products from AWS include:

- DynamoDB – NoSQL database used primarily for large volumes of transactional data

- AWS Glue – fully managed, cost-effective ETL service

- S3 Storage Buckets – AWS’s one-stop storage solution for archiving and storing data at a lower cost

Google Cloud Platform (GCP) - Strategize once, always get the best performance

BigQuery, the flagship analytics product from GCP, is a serverless, highly scalable and cost-effective database/data warehouse solution for most analytical use cases and scenarios. Together with a host of other GCP products, BigQuery makes building an end-to-end pipeline for analytics and data science a painless transformation.

BigQuery connects to most BI tools and platforms with out-of-the-box connectors and is easily customizable to connect to other third-party applications. Other GCP products include:

- Dataflow – for stream & batch processing of data

- Cloud Data Fusion – to build highly scalable ETL pipelines

- AI Hub – highly optimizable and/or simple plug and play AI & ML platform

- Cloud Dataproc – on-demand Hadoop cluster on GCP

- Data Studio & Looker – for visualization and exploratory analytics

BigQuery can be further leveraged to build highly efficient and cost-effective solutions for your analytical problems. GCP offers various storage options and is one of the cheaper storage solutions currently in the market, with a vast array of options based on the access frequency and the Service Level Agreement (SLA) required for the data. All of GCP’s products come with Google’s promise of network security and easy enterprise management of roles, access, and hierarchy.

Microsoft Azure Synapse - Strong roadmap

Azure is Microsoft’s cloud data platform. Microsoft is no stranger to enterprise solutions; your organization is probably using at least three of their products. This makes Azure a great option when you want to integrate cloud into your existing enterprise applications and security hierarchy. Microsoft 365 comes with a free subscription to integrate your enterprise’s Active Directory with Azure AD, making single sign-on with your on-premises environment a breeze. Azure also has a vast array of products. Its newest and flagship analytics product is Azure Synapse, the next generation of Azure SQL Data Warehouse. Synapse is still in its infancy compared to BigQuery and Redshift. It is currently not a serverless solution, and it adds a little overhead in terms of administration and architecting. One of the standout products within Azure is “Data Factory,” a service built to make ETL and ELT intuitive and easy to implement.

Azure’s integration with Power BI makes it really simple to visualize and explore data stored in Azure Blob Storage or housed in Synapse. Some of the other products within Azure that are worth mentioning are:

- Azure Event Hubs – Azure’s event messaging service

- Azure Functions – serverless compute for ad-hoc or event driven processes

- Azure Data Lake – one-stop solution for all data storage and archiving needs

Azure plans to further integrate all their solutions with Synapse, making for strong hybrid processing in the near future.

SAP - Cloud-based company powered by HANA

SAP made a strategic investment in in-memory database technology over a decade ago with HANA. Now SAP is pivoting to be a cloud-based company powered by HANA. The once overwhelming suite of BI and premise-based BW and HANA offerings is now greatly simplified, with cloud-based offerings including:

- SAP Analytics Cloud – SAC is the cloud-based analytics environment with some planning and predictive capabilities

- SAP HANA Cloud – cloud-based HANA database platform with additional features for Data Lifecycle management of current and historical data including HANA Data Lake

There are two main data warehouse products offered by SAP in the cloud that can be used. First is the flagship BW/4HANA which retains all the capabilities of BW relating to support for complex security, SAP content, and simplified modeling with more openness to third-party analytic tools. Although the capabilities are well known, it is primarily an IT-centric solution and requires a specific skillset.

New for 2020 is SAP Data Warehouse Cloud (DWC), which is more business focused. It is aimed at data engineers who may be part of the line of business and have some technical skills but are not in IT. IT has more of a supportive, collaborative role: models can be centrally controlled if needed, but users and departments can set up their own “spaces” and scale up or ramp down to control costs. It also comes with SAC licenses so business and IT can collaborate in one environment. Further, it is completely open for third-party data and analytics tools as well.

A big value proposition for SAP DWC is that SAP source environments based on HANA, whether cloud or on-premise, can be sourced virtually as remote connections. Not all your SAP data needs to be extracted to the cloud! Business users can also connect to other databases or data lakes, upload custom data sets, and relate them in the cloud, with data governance capability if desired. While the concept of a federated data warehouse sounds great, one should be realistic about performance in production; SAP has provided the capability to replicate tables if needed.

Interestingly, DWC is actually hosted by Amazon Web Services but can also connect to Google BigQuery as a data source.

Snowflake - Keep it simple and efficient

That should probably be the motto of Snowflake, as that’s exactly what it does as a database/data warehouse. But it’s important to remember that it’s only a database/data warehouse. While it doesn’t bring a host of products like other cloud solutions, it does have easy and effective integration with a variety of products. Not to mention, by using JDBC/ODBC you can connect it with practically any of your on-premise or other cloud tools.

Snowflake does need to be hosted on a cloud service (GCP, AWS, Azure), but your organization will only have to deal with Snowflake, which simplifies invoicing and security.

Snowflake has excellent performance and is highly scalable. It also separates data and compute entirely. You will need to choose the computational capacity required for your analytical needs, but it can be scaled vertically and horizontally to ensure all your users get the same Service Level Agreement (SLA). The compute is pay-as-you-use and has an automatic termination timer, so you never have to worry about paying for unused service.

Snowflake has some unique features that make it an attractive data warehouse such as:

- Time-Travel – querying tables to view data at a certain point in time

- Zero-Copy Cloning – creating copies of tables without actually creating another copy of the data (these are not views)

These features make Snowflake more agile than other solutions, reducing the time to value for your use case or problem statement. Snowflake has an out-of-the-box connector to BI tools making the visualization and dashboarding a breeze. Apart from the encryption and security provided by the cloud provider you choose, Snowflake adds its own layer of encryption to ensure your data is always safe.
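To make the two features listed above more concrete, the sketch below builds the kind of SQL statements they correspond to: a query using Snowflake’s `AT(OFFSET => ...)` clause and a `CREATE TABLE ... CLONE` statement. The helper functions and table names are hypothetical, and the statements are only printed here rather than run against a Snowflake account.

```python
# Helpers that build Snowflake SQL text. Function and table names are
# hypothetical; nothing here connects to or runs against Snowflake.


def time_travel_query(table: str, seconds_ago: int) -> str:
    """SELECT the table as it looked `seconds_ago` seconds in the past."""
    return f"SELECT * FROM {table} AT(OFFSET => -{seconds_ago});"


def clone_statement(source: str, target: str) -> str:
    """Create a zero-copy clone of `source` named `target`."""
    return f"CREATE TABLE {target} CLONE {source};"


one_hour_ago_sql = time_travel_query("meter_reads", 60 * 60)
clone_sql = clone_statement("meter_reads", "meter_reads_dev")
print(one_hour_ago_sql)
print(clone_sql)
```

Plain string formatting is used only to keep the sketch short; identifiers coming from user input should be validated before being placed into SQL.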

Conclusion

How do you know which is the right cloud solution for your enterprise?

In general, every cloud provider mentioned above can create an end-to-end pipeline to stream, transform and create a data warehouse to be used by your enterprise’s analytical and business teams. However, based on your specific needs and timeline, the cloud provider you choose can make a difference between a successful, scalable, streamlined & cost-effective solution and the opposite.

Here are some questions to consider to help decide which cloud solution best matches your needs:

- Is it a top priority to ensure your data streaming is never bottlenecked?

- Is cost your biggest concern?

- Is there a certain SLA you are trying to meet with the data?

- Is integration with external/on-premise applications a priority?

- Would your enterprise be willing to invest in resources to maintain applications?

If you would like to engage in a no-cost discussion and exchange of ideas about moving your utility operations to the cloud, I invite you to reach out to Utegration anytime. We are here to help.